Common Tips

Introduction to the salloc command

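salloc lets you obtain the compute resources you need up front, before running any work. Once the allocation is granted, you can ssh into the allocated compute node and run programs there; the resources are released when the salloc session ends. It is commonly used for debugging, and its common options are essentially the same as those of sbatch.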
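The shell that salloc opens still runs on the login node (in the example below the prompt stays at login02), but salloc exports the allocation details into that shell, including SLURM_JOB_ID and SLURM_JOB_NODELIST. As a minimal sketch, the hypothetical helper alloc_summary below checks whether the current shell is inside an allocation before you ssh to a node:

```shell
#!/usr/bin/env bash
# alloc_summary (hypothetical helper): report the current Slurm allocation,
# or fail if this shell was not started by salloc/sbatch.
alloc_summary() {
  if [[ -z ${SLURM_JOB_ID:-} ]]; then
    echo "not inside a Slurm allocation" >&2
    return 1
  fi
  # SLURM_JOB_NODELIST may be in compressed form, e.g. a02r1n[14-15]
  echo "job=${SLURM_JOB_ID} nodes=${SLURM_JOB_NODELIST:-unknown}"
}
```

For example, inside the salloc shell you could run alloc_summary and then ssh to the first node printed by scontrol show hostnames $SLURM_JOB_NODELIST.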

Example

[yuki@login02 ~]$ salloc -p kshcnormal -N 1 -n 4

salloc: Pending job allocation 47416155

salloc: job 47416155 queued and waiting for resources

salloc: job 47416155 has been allocated resources

salloc: Granted job allocation 47416155

salloc: Waiting for resource configuration

salloc: Nodes a02r1n14 are ready for job

[yuki@login02 ~]$ ssh a02r1n14

[yuki@a02r1n14 ~]$ hostname

a02r1n14

[yuki@a02r1n14 ~]$ exit

logout

Connection to a02r1n14 closed.

[yuki@login02 ~]$ exit

exit

salloc: Relinquishing job allocation 47416155

salloc: Job allocation 47416155 has been revoked.

[yuki@login02 ~]$ squeue

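JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

To make software easier to use, the cluster manages environment variables centrally with the modules tool. By loading a module, you can use HPC environments such as compilers (e.g. GCC, Intel), libraries (e.g. MPI, databases), GPU drivers and toolkits (e.g. CUDA, ROCm, DTK), and materials and chemistry software (e.g. LAMMPS, CP2K).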

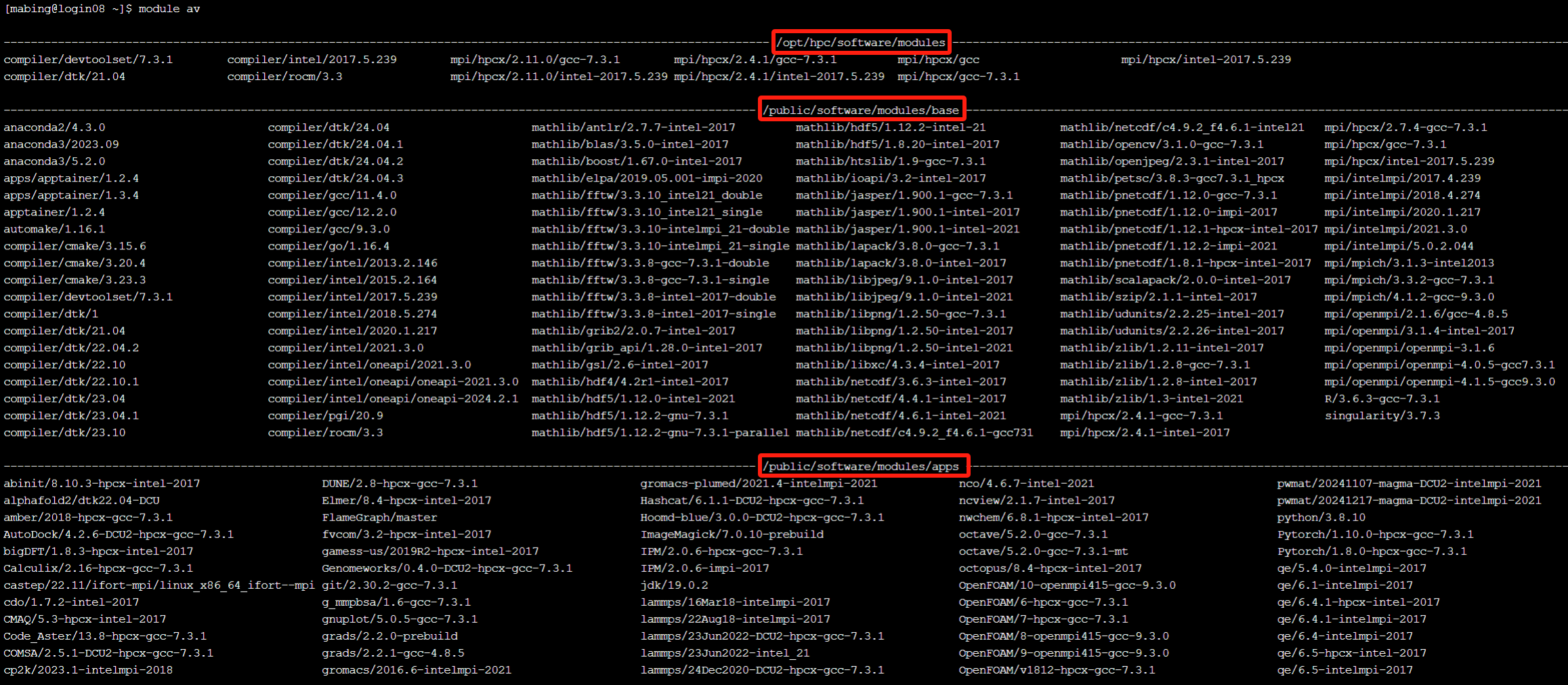

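In the eshell command line, run module av to list all software preinstalled on the cluster. As shown in the figure, software under /opt/hpc/software/modules is deployed locally on the node being queried (login08), whereas software under /public/software/modules/base is deployed on shared storage and can be accessed from all login nodes and all compute nodes.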
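To find the newest installed version of a package without scanning the full module av listing, module -t avail prints one module per line (Environment Modules writes this listing to stderr, hence 2>&1). The helper below is a sketch under that assumption; latest_module is a hypothetical name, and it relies on GNU sort -V for version ordering:

```shell
#!/usr/bin/env bash
# latest_module (hypothetical helper): read a terse module listing on stdin
# and print the highest version directly under a prefix such as compiler/gcc.
latest_module() {
  local prefix=${1%/}
  # keep only "<prefix>/<version>" lines; directory headers are dropped
  grep -E "^${prefix}/[^/]+$" | sort -V | tail -n 1
}
```

For example, module -t avail 2>&1 | latest_module compiler/gcc prints the highest compiler/gcc/<version> entry, which you can then pass to module load.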

The three commonly used compilers, GCC, Intel, and PGI, can all be loaded through module.

(1) GCC compiler

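GCC (the GNU Compiler Collection) is a set of programming-language compilers developed by the GNU Project. It is free software released under the GPL and LGPL licenses and a key part of the GNU Project; it is the standard compiler on free Unix-like operating systems and was historically the standard compiler of Mac OS X. GCC, and its C compiler in particular, is widely regarded as the de facto standard for cross-platform compilation. GCC handles C, C++, Fortran, Objective-C, Ada, and other languages. By default, after you enter eshell the environment uses GCC 7.3.1 from compiler/devtoolset/7.3.1 (check with module list). You can also switch to a newer GCC if your build requires it.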

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/dtk/22.04.2 2) compiler/devtoolset/7.3.1 3) mpi/hpcx/gcc-7.3.1

[mabing@login08 ~]$ which g++

/opt/rh/devtoolset-7/root/usr/bin/g++

[mabing@login08 ~]$ g++ --version

g++ (GCC) 7.3.1 20180303 (Red Hat 7.3.1-5)

Copyright (C) 2017 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/gcc/9.3.0

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/gcc/9.3.0

[mabing@login08 ~]$ which g++

/public/software/compiler/gcc-9.3.0/bin/g++

[mabing@login08 ~]$ g++ --version

g++ (GCC) 9.3.0

Copyright (C) 2019 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

| Language | Compiler command |
|---|---|
| C | gcc |
| C++ | g++ |
| Fortran77 | gfortran |
| Fortran90/95 | gfortran |

(2) Intel compiler

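The Intel compilers are Intel's compiler products for the x86 platform (IA32/Intel64/IA64/MIC) and support C, C++, and Fortran. They are specifically optimized for Intel processors, deliver strong performance there, and also perform well on other x86 processors.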

Several Intel compiler versions are available through module; compiler/intel/2017.5.239 through compiler/intel/2021.3.0 are the commonly used ones.

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/gcc/9.3.0

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/intel/2017.5.239

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/intel/2017.5.239

[mabing@login08 ~]$ which icc

/opt/hpc/software/compiler/intel/intel-compiler-2017.5.239/bin/intel64/icc

[mabing@login08 ~]$ icc --version

icc (ICC) 17.0.5 20170817

Copyright (C) 1985-2017 Intel Corporation. All rights reserved.

| Language | Compiler command |
|---|---|
| C | icc |
| C++ | icpc |
| Fortran77 | ifort |
| Fortran90/95 | ifort |

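(3) PGI compiler

The PGI compilers are a compiler product from The Portland Group and support C, C++, and Fortran. They also support HPF (High Performance Fortran, a parallel extension of Fortran 90) and CUDA Fortran. PGI has since been acquired by NVIDIA, and the compilers now ship as part of the NVIDIA HPC SDK.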

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/intel/2017.5.239

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/pgi/20.9

[mabing@login08 ~]$ which pgf90

/public/software/compiler/nvidia/hpc_sdk/Linux_x86_64/20.9/compilers/bin/pgf90

[mabing@login08 ~]$ pgf90 --version

pgf90 (aka nvfortran) 20.9-0 LLVM 64-bit target on x86-64 Linux -tp p7

PGI Compilers and Tools

Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.

| Language | Compiler command |
|---|---|
| C | pgcc |
| C++ | pgCC/pgc++ |
| Fortran77 | pgf77 |
| Fortran90/95 | pgf90/pgf95 |
| HPF | pghpf |
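When a build script has to switch between the three compiler families, the tables above can be encoded in a small lookup. This is a sketch with a hypothetical function name (compiler_cmd) and made-up language keys (c, cxx, f77, f90); for PGI it picks pgc++ and pgf90 from the alternatives listed.

```shell
#!/usr/bin/env bash
# compiler_cmd (hypothetical helper): map a compiler family and a language key
# to the driver names listed in the GCC, Intel and PGI tables.
compiler_cmd() {
  case "$1:$2" in
    gnu:c)               echo gcc ;;
    gnu:cxx)             echo g++ ;;
    gnu:f77|gnu:f90)     echo gfortran ;;
    intel:c)             echo icc ;;
    intel:cxx)           echo icpc ;;
    intel:f77|intel:f90) echo ifort ;;
    pgi:c)               echo pgcc ;;
    pgi:cxx)             echo pgc++ ;;
    pgi:f77)             echo pgf77 ;;
    pgi:f90)             echo pgf90 ;;
    *) echo "unknown family/language: $1 $2" >&2; return 1 ;;
  esac
}
```

For example, after module load compiler/intel/2017.5.239 you could run export CC=$(compiler_cmd intel c) FC=$(compiler_cmd intel f90) before a configure script that reads CC and FC.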

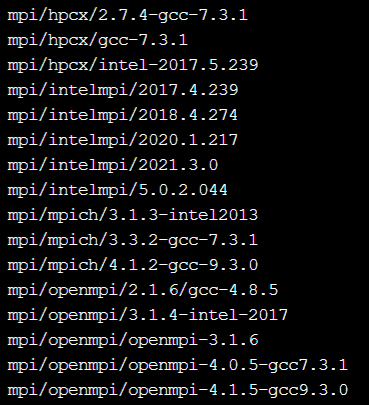

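MPI (Message Passing Interface) is a standard specification for message-passing libraries, not a programming language, and it supports Fortran, C, and C++. Based on software manuals and users' habits, module offers four popular MPI libraries: intelmpi, hpcx, openmpi, and mpich.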

When loading MPI, keep two points in mind:

(1) Dependencies on other modules. Take mpi/openmpi/openmpi-4.1.5-gcc9.3.0 as an example: the name can be read as Open MPI 4.1.5 built with GCC 9.3.0. Therefore, to use mpi/openmpi/openmpi-4.1.5-gcc9.3.0 you must first load compiler/gcc/9.3.0.

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/gcc/9.3.0 mpi/openmpi/openmpi-4.1.5-gcc9.3.0

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/gcc/9.3.0 2) mpi/openmpi/openmpi-4.1.5-gcc9.3.0

| Language | Open MPI compiler wrapper |
|---|---|
| C | mpicc |
| C++ | mpicxx |
| Fortran77 | mpif77/mpifort |
| Fortran90/95 | mpif90/mpifort |

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/intel/2017.5.239 mpi/intelmpi/2017.4.239

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/intel/2017.5.239 2) mpi/intelmpi/2017.4.239

| Language | Intel MPI 2017 compiler wrapper |
|---|---|
| C | mpiicc |
| C++ | mpiicpc |
| Fortran77 | mpiifort |
| Fortran90/95 | mpiifort |

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/intel/2021.3.0 mpi/intelmpi/2021.3.0

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/intel/2021.3.0 2) mpi/intelmpi/2021.3.0

| Language | Intel MPI 2021 compiler wrapper |
|---|---|
| C | mpiicc |
| C++ | mpiicpc |
| Fortran77 | mpiifort |
| Fortran90/95 | mpiifort |

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/devtoolset/7.3.1 mpi/hpcx/2.7.4-gcc-7.3.1

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/devtoolset/7.3.1 2) mpi/hpcx/2.7.4-gcc-7.3.1

| Language | HPC-X compiler wrapper |
|---|---|
| C | mpicc |
| C++ | mpicxx |
| Fortran77 | mpif77/mpifort |
| Fortran90/95 | mpif90/mpifort |
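Because Intel MPI names its Intel-compiler C wrapper mpiicc while Open MPI and HPC-X use mpicc (see the tables above), a build script can pick whichever wrapper the currently loaded MPI module provides. A minimal sketch with the hypothetical helper pick_mpicc; it checks mpiicc first because Intel MPI also ships an mpicc that wraps gcc:

```shell
#!/usr/bin/env bash
# pick_mpicc (hypothetical helper): print the C MPI wrapper found on PATH,
# preferring Intel MPI's mpiicc over the generic mpicc.
pick_mpicc() {
  if command -v mpiicc >/dev/null 2>&1; then
    echo mpiicc
  elif command -v mpicc >/dev/null 2>&1; then
    echo mpicc
  else
    echo "no MPI C wrapper on PATH; load an mpi/ module first" >&2
    return 1
  fi
}
```

For example, $(pick_mpicc) -O2 -o hello hello.c, where hello.c stands for your own source file.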

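(2) Conflicts. Continuing with mpi/openmpi/openmpi-4.1.5-gcc9.3.0 as the example, run module show mpi/openmpi/openmpi-4.1.5-gcc9.3.0 and you will find the line conflict mpi. This means that once mpi/openmpi/openmpi-4.1.5-gcc9.3.0 is loaded, no other MPI module can be loaded, because only one MPI is allowed in an environment.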

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/gcc/9.3.0 2) mpi/openmpi/openmpi-4.1.5-gcc9.3.0

[mabing@login08 ~]$ module load mpi/intelmpi/2021.3.0

mpi/intelmpi/2021.3.0(8):ERROR:150: Module 'mpi/intelmpi/2021.3.0' conflicts with the currently loaded module(s) 'mpi/openmpi/openmpi-4.1.5-gcc9.3.0'

mpi/intelmpi/2021.3.0(8):ERROR:102: Tcl command execution failed: conflict mpi

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/gcc/9.3.0 2) mpi/openmpi/openmpi-4.1.5-gcc9.3.0

[mabing@login08 ~]$ module show mpi/openmpi/openmpi-4.1.5-gcc9.3.0

-------------------------------------------------------------------

/public/software/modules/base/mpi/openmpi/openmpi-4.1.5-gcc9.3.0:

module load compiler/gcc/9.3.0

conflict mpi

module-whatis Mellanox HPC-X toolkit

setenv HPCX_DIR /public/software/mpi/openmpi

setenv HPCX_HOME /public/software/mpi/openmpi
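Before loading a different MPI, you can check which MPI module is already active. Environment Modules keeps the loaded modules in the colon-separated LOADEDMODULES variable; the sketch below (hypothetical helper loaded_mpi) assumes that all MPI modules live under mpi/, as they do in this guide:

```shell
#!/usr/bin/env bash
# loaded_mpi (hypothetical helper): print every loaded module under mpi/,
# returning 1 if none is loaded.
loaded_mpi() {
  local m found=1
  local IFS=:   # split LOADEDMODULES on colons
  for m in ${LOADEDMODULES:-}; do
    if [[ $m == mpi/* ]]; then
      echo "$m"
      found=0
    fi
  done
  return $found
}
```

If it prints a module, run module unload on that name (or module purge) before loading the new compiler and MPI pair.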

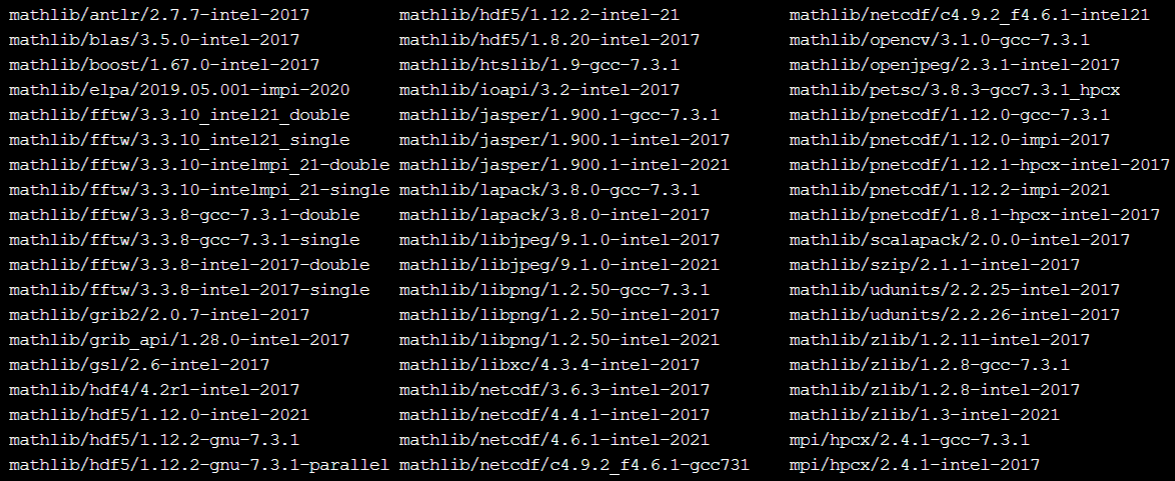

module provides commonly used math libraries (mathlib), including but not limited to blas, boost, elpa, fftw, grib2, gsl, hdf4, hdf5, jasper, lapack, libjpeg, libpng, libxc, netcdf, petsc, pnetcdf, scalapack, and zlib.

When loading mathlib libraries, keep two points in mind:

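(1) Dependencies on other modules. Take mathlib/fftw/3.3.10-intelmpi_21-single as an example: the name can be read as single-precision FFTW 3.3.10 built with Intel MPI 2021. Therefore, to use mathlib/fftw/3.3.10-intelmpi_21-single you should first load compiler/intel/2021.3.0 and mpi/intelmpi/2021.3.0.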

[mabing@login08 ~]$ module purge

[mabing@login08 ~]$ module load compiler/intel/2021.3.0 mpi/intelmpi/2021.3.0 mathlib/fftw/3.3.10-intelmpi_21-single

[mabing@login08 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/intel/2021.3.0 2) mpi/intelmpi/2021.3.0 3) mathlib/fftw/3.3.10-intelmpi_21-single
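module show prints the body of a modulefile, including any module load lines through which it pulls in its own dependencies. As a sketch, the hypothetical helper module_prereqs extracts those names so you can load them explicitly first:

```shell
#!/usr/bin/env bash
# module_prereqs (hypothetical helper): read `module show` output on stdin and
# print the modules named on its "module load" lines, one per line.
module_prereqs() {
  awk '$1 == "module" && $2 == "load" { for (i = 3; i <= NF; i++) print $i }'
}
```

For example, module show mathlib/fftw/3.3.10-intelmpi_21-single 2>&1 | module_prereqs (Environment Modules prints module show output on stderr).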

(2) Use module show to find the exact library names. Running module show mathlib/fftw/3.3.10-intelmpi_21-single shows that the directory prepended to LD_LIBRARY_PATH contains the FFTW static and shared libraries.

[mabing@login08 ~]$ module show mathlib/fftw/3.3.10-intelmpi_21-single

-------------------------------------------------------------------

/public/software/modules/base/mathlib/fftw/3.3.10-intelmpi_21-single:

conflict mathlib/fftw

module load compiler/intel/2021.3.0

module load mpi/intelmpi/2021.3.0

prepend-path LIBRARY_PATH /public/software/mathlib/fftw/3.3.10/single/intel/lib

prepend-path LD_LIBRARY_PATH /public/software/mathlib/fftw/3.3.10/single/intel/lib

prepend-path INCLUDE /public/software/mathlib/fftw/3.3.10/single/intel/include

prepend-path MANPATH /public/software/mathlib/fftw/3.3.10/single/intel/share/man

prepend-path PKG_CONFIG_PATH /public/software/mathlib/fftw/3.3.10/single/intel/lib/pkgconfig

-------------------------------------------------------------------

[mabing@login08 ~]$ ls /public/software/mathlib/fftw/3.3.10/single/intel/lib

cmake libfftw3f.la libfftw3f_mpi.la libfftw3f_mpi.so.3 libfftw3f_omp.a libfftw3f_omp.so libfftw3f_omp.so.3.6.10 libfftw3f.so.3 libfftw3f_threads.a libfftw3f_threads.so libfftw3f_threads.so.3.6.10

libfftw3f.a libfftw3f_mpi.a libfftw3f_mpi.so libfftw3f_mpi.so.3.6.10 libfftw3f_omp.la libfftw3f_omp.so.3 libfftw3f.so libfftw3f.so.3.6.10 libfftw3f_threads.la libfftw3f_threads.so.3 pkgconfig
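To turn such a listing into linker flags, strip the lib prefix and the .a/.so suffixes. The helper below is a sketch with a hypothetical name (lib_flags); it only lists the names alphabetically, and link order still matters: FFTW's MPI interface is linked before the serial library, e.g. -lfftw3f_mpi -lfftw3f.

```shell
#!/usr/bin/env bash
# lib_flags (hypothetical helper): turn a directory listing (one name per line)
# into unique -l linker flags, keeping only lib*.a and lib*.so* entries.
lib_flags() {
  local name
  while IFS= read -r name; do
    name=${name##*/}                                   # drop any directory part
    [[ $name == lib*.a || $name == lib*.so* ]] || continue
    name=${name#lib}                                   # libfftw3f_mpi.so.3 -> fftw3f_mpi.so.3
    name=${name%%.*}                                   # fftw3f_mpi.so.3 -> fftw3f_mpi
    echo "-l$name"
  done | LC_ALL=C sort -u
}
```

For example, ls -1 /public/software/mathlib/fftw/3.3.10/single/intel/lib | lib_flags, then link with mpiicc your_code.c -L/public/software/mathlib/fftw/3.3.10/single/intel/lib -lfftw3f_mpi -lfftw3f, where your_code.c is a placeholder for your own source.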

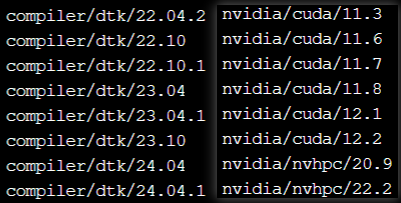

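At centers that provide NVIDIA GPU queues (East China Region 1 (Kunshan), East China Region 4 (Shandong), North China Region 1 (Xiongheng), etc.), module provides CUDA; at centers that provide Z100 and K100 DCU queues (East China Region 1 (Kunshan), Northwest Region 1 (Xi'an), East China Region 3 (Wuzhen), etc.), module provides DTK. You do not need to install them yourself.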

To check accelerator utilization, ssh to the compute node that holds the cards, load CUDA or DTK, and run watch nvidia-smi or watch hy-smi. Login nodes have no GPU devices.
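For a one-off, non-interactive snapshot (for example from a script), nvidia-smi can print selected fields as CSV with --query-gpu and --format=csv,noheader,nounits. The sketch below formats that output; the helper name gpu_summary is hypothetical, and hy-smi is not covered because its query options are not shown in this guide.

```shell
#!/usr/bin/env bash
# gpu_summary (hypothetical helper): format CSV lines of
# "index, utilization.gpu, memory.used, memory.total" produced by nvidia-smi.
gpu_summary() {
  awk -F', *' 'NF == 4 { printf "GPU%s util=%s%% mem=%s/%sMiB\n", $1, $2, $3, $4 }'
}
```

Usage on a GPU node: nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits | gpu_summary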

Model NVIDIA RTX 3080: load nvidia/cuda/11.4 and run watch nvidia-smi to check utilization.

[mabing@login01 gpu-test]$ ls

in.silica isi500.data lammps+gpu.slurm

[mabing@login01 gpu-test]$ sbatch lammps+gpu.slurm

Submitted batch job 11917545

[mabing@login01 gpu-test]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

11917545 xhhgnormal lammps-DCU mabing R 0:04 1 e01r04

[mabing@login01 gpu-test]$ ssh e01r04

Last login: Sun Jan 12 18:51:40 2025 from login01

[mabing@e01r04 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/devtoolset/7.3.1 2) mpi/hpcx/gcc-7.3.1

[mabing@e01r04 ~]$ module load nvidia/cuda/11.4

[mabing@e01r04 ~]$ watch nvidia-smi

Sun Jan 12 18:56:27 2025

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 530.41.03 Driver Version: 530.41.03 CUDA Version: 12.1 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 3080 On | 00000000:67:00.0 Off | N/A |

| 30% 37C P2 105W / 320W| 266MiB / 10240MiB | 90% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| 0 N/A N/A 7894 C lmp_mpi 264MiB |

+---------------------------------------------------------------------------------------+

Model Z100: load compiler/dtk/24.04.1 and run watch hy-smi to check utilization.

[mabing@login03 dcu_1_pme]$ ls

amber_na.ff ar-dbd_DNA_chain_C.itp ar-dbd_Ion_chain_A2.itp ar-dbd.ndx ar-dbd_Protein_chain_A.itp ar-dbd.top gmx-plumed.slurm npt.gro posre_Ion_chain_B2.itp prod.mdp prod.tpr

arbdb_prod.log ar-dbd_DNA_chain_D.itp ar-dbd_Ion_chain_B2.itp ardbd_prod.err ar-dbd_Protein_chain_B.itp bind.sh mdout.mdp posre_Ion_chain_A2.itp prod.cpt prod_prev.cpt prod.xtc

[mabing@login03 dcu_1_pme]$ sbatch gmx-plumed.slurm

Submitted batch job 79943338

[mabing@login03 dcu_1_pme]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

79943338 kshdnormal gromacs mabing R 0:05 1 e07r1n06

[mabing@login03 dcu_1_pme]$ ssh e07r1n06

Warning: Permanently added 'e07r1n06,10.5.7.7' (ECDSA) to the list of known hosts.

Last login: Thu Dec 26 15:32:09 2024

[mabing@e07r1n06 ~]$ module list

Currently Loaded Modulefiles:

1) compiler/devtoolset/7.3.1 2) mpi/hpcx/2.11.0/gcc-7.3.1 3) compiler/rocm/dtk-22.10.1

[mabing@e07r1n06 ~]$ module unload compiler/rocm/dtk-22.10.1

[mabing@e07r1n06 ~]$ module load compiler/dtk/24.04.1

[mabing@e07r1n06 ~]$ watch hy-smi

Every 2.0s: hy-smi Sun Jan 12 19:13:48 2025

============================ System Management Interface =============================

======================================================================================

DCU Temp AvgPwr Perf PwrCap VRAM% DCU% Mode

0 51.0C 75.0W auto 300.0W 18% 96% N/A

======================================================================================

=================================== End of SMI Log ===================================

If you need Docker in eshell, Singularity is the better choice: it offers functionality similar to Docker but is safer on a shared cluster.

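Use singularity pull to download a .sif image file, then use singularity shell to start the container interactively and work inside it. The container in this example already bundles Python and other programs.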

[mabing@login03 souporcell]$ ls

[mabing@login03 souporcell]$ module load singularity/3.7.3

[mabing@login03 souporcell]$ which singularity

/public/software/apps/singularity/3.7.3/bin/singularity

[mabing@login03 souporcell]$ singularity pull --arch amd64 library://wheaton5/souporcell/souporcell:release

INFO: Downloading library image

2.1GiB / 2.1GiB [====================================================================] 100 % 6.0 MiB/s 0s

WARNING: integrity: signature not found for object group 1

WARNING: Skipping container verification

[mabing@login03 souporcell]$ ll

total 2181416

-rwxrwxr-x 1 mabing mabing 2233769984 Jan 12 19:42 souporcell_release.sif

[mabing@login03 souporcell]$ singularity shell ./souporcell_release.sif

Singularity> which python

/miniconda3/bin/python

Singularity> python --version

Python 3.10.13
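Inside a batch script you usually want singularity exec, which runs one command in the image and exits, instead of the interactive singularity shell. A minimal wrapper sketch (run_in_sif is a hypothetical name) that checks for the image and for the singularity command first:

```shell
#!/usr/bin/env bash
# run_in_sif (hypothetical helper): run one command inside a .sif image.
run_in_sif() {
  local sif=$1
  shift
  if [[ ! -f $sif ]]; then
    echo "image not found: $sif" >&2
    return 1
  fi
  if ! command -v singularity >/dev/null 2>&1; then
    echo "singularity not on PATH; try: module load singularity/3.7.3" >&2
    return 1
  fi
  # forward the remaining arguments as the command to run in the container
  singularity exec "$sif" "$@"
}
```

For example, after module load singularity/3.7.3, run_in_sif ./souporcell_release.sif python --version runs the container's Python non-interactively.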

Use singularity shell to interactively start the preinstalled .sif images that provide newer glibc versions (glibc 2.29, glibc 2.34). The .sif files are located in /public/software/apps/DeepLearning/singularity.

[mabing@login08 singularity]$ pwd

/public/software/apps/DeepLearning/singularity

[mabing@login08 singularity]$ ll

total 10164156

-rwxr-xr-x 1 root root 1975029760 Dec 4 2023 centos7.6-mpi4.0-gcc9.3-cmake3.21-make4.2-glibc2.29-glibcxx3.4.26-py3.8-dtk23.04.1.sif

-rwxr-xr-x 1 root root 2476720128 Dec 4 2023 centos7.6-mpi4.0-gcc9.3-cmake3.21-make4.2-glibc2.29-glibcxx3.4.26-py3.8-dtk23.10.sif

-rwxr-xr-x 1 root root 548773888 Dec 4 2023 centos7.6-mpi4.0-gcc9.3-cmake3.21-make4.2-glibc2.29-glibcxx3.4.26-py3.8.sif

-rwxr-xr-x 1 root root 5407571968 May 23 2024 ubuntu20.04-mpi4.0-gcc9.4-cmake3.19-mkl-py3.10-v0.1.sif

[mabing@login08 singularity]$ module load singularity/3.7.3

[mabing@login08 singularity]$ singularity shell centos7.6-mpi4.0-gcc9.3-cmake3.21-make4.2-glibc2.29-glibcxx3.4.26-py3.8.sif

Singularity> ldd --version

ldd (GNU libc) 2.29

Copyright (C) 2019 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

Written by Roland McGrath and Ulrich Drepper.
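Before reaching for a container, you can check whether the node's own glibc already satisfies a program's requirement. The sketch below uses getconf GNU_LIBC_VERSION, which prints the library name and version (for example glibc 2.17), and GNU sort -V for the comparison; both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# version_ge (hypothetical helper): succeed if version $1 >= version $2.
# GNU sort -V orders versions numerically, e.g. 2.9 before 2.29.
version_ge() {
  [[ $(printf '%s\n' "$1" "$2" | sort -V | head -n 1) == "$2" ]]
}

# host_glibc (hypothetical helper): print the glibc version of this node.
host_glibc() {
  getconf GNU_LIBC_VERSION | awk '{ print $2 }'
}
```

For example, if version_ge "$(host_glibc)" 2.29 fails, run the program through one of the glibc 2.29 images listed here with singularity exec.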

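In script mode, singularity exec provides a flexible and secure runtime environment for software that requires a newer glibc, such as TensorFlow 2.11 and STAR-CCM+ 24. Save the following script and submit it with sbatch to run the job: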

#!/bin/bash

#SBATCH -J STAR # job name

#SBATCH -p kshcnormal # partition (queue) name

#SBATCH --ntasks-per-node=32 # processes per node

#SBATCH -N 1

#SBATCH -o %j.out

#SBATCH -e %j.err

module load singularity/3.7.3

WDIR=$(pwd) # get the current directory

cd $WDIR

##################################################################

NP=$SLURM_NPROCS

NNODE=`srun hostname |sort |uniq | wc -l`

LOG_FILE=$WDIR/job_${NP}c_${NNODE}n_$SLURM_JOB_ID.log

HOSTFILE=$WDIR/hosts_$SLURM_JOB_ID

srun hostname |sort |uniq -c |awk '{printf "%s:%s\n",$2,$1}' > $HOSTFILE

############### Run the main program

# Commands inside the double quotes run in the container; $HOSTFILE and $NP are expanded by this job script before singularity starts.

singularity exec /public/software/apps/DeepLearning/singularity/centos7.6-mpi4.0-gcc9.3-cmake3.21-make4.2-glibc2.29-glibcxx3.4.26-py3.8.sif bash -c "

export DISPLAY=vncserver04:4

export FI_PROVIDER=verbs

export CDLMD_LICENSE_FILE=/public/home/username/software/starccm19-install/19.04.009-R8/license.dat

/public/home/username/software/starccm19-install/19.04.009-R8/STAR-CCM+19.04.009-R8/star/bin/starccm+ -machinefile $HOSTFILE -np $NP -rsh ssh -mpidriver intel

"

Introduction to the sbatch command

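sbatch submits a batch job script to Slurm. The basic form is sbatch jobfile, where jobfile is the job script that specifies the resource requests and the work to run. sbatch exits as soon as the script has been handed to the scheduler and prints the job ID it was assigned. Once the requested resources are available, the job starts, and the scheduler runs the job script on the first allocated compute node (not on the login node). This is the most widely used way to submit jobs on HPC systems; almost all non-interactive work is submitted with sbatch.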

Common sbatch options

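| Option | Description |
|---|---|
| -J, --job-name=<jobname> | Set the job name to <jobname>. |
| -n, --ntasks=<number> | Number of tasks to run; by default each task gets one CPU core. Applies to the job, not to job steps. |
| -N, --nodes=<nnodes> | Request nnodes nodes for the job. If the request exceeds the number of nodes configured in the partition, the job is rejected. |
| -p, --partition=<partition_name> | Allocate resources in the given partition. If omitted, the system default partition is used. |
| --ntasks-per-node=<ntasks> | Number of tasks (processes) to run on each node. |
| -c, --cpus-per-task=<ncpus> | Each task of the job step needs ncpus CPU cores. If omitted, each task gets one core. |
| -t, --time=<time> | Time limit; the job is killed if it runs longer. Formats: minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, days-hours:minutes:seconds. |
| -o, --output=<filename> | Name of the job's standard output file. Shell environment variables cannot be used; use replacement symbols such as %j (job ID) instead. |
| -e, --error=<filename> | Name of the job's standard error file; same rules as -o. |
| -w, --nodelist=<nodenamelist> | Request the given list of nodes, as comma-separated node names or a node range (e.g. h02r1n[00-19]). |
| --mem=<size[units]> | Memory limit for the job on each node. |
| -d, --dependency=<dependency_list> | Set job dependencies. |
| --exclusive | Allocate nodes exclusively: no other job may use them while this job runs, even if not all cores are in use. |
| --gres=<list> | Generic resources to use on each node, e.g. --gres=gpu:2 requests 2 GPUs on each node. |
| -x, --exclude=<host1,host2,… or filename> | Do not run on the listed nodes (or on the nodes listed in filename). |
| --ntasks-per-socket=<ntasks> | Run <ntasks> tasks per CPU socket; use together with -n/--ntasks=<number>, and bind <ntasks> tasks to each socket. Applies to the job, not to job steps. |
| --mem-per-cpu=<size> | Minimum memory required per allocated CPU. |
| --no-requeue | Never requeue the job under any circumstances. |
| -h, --help | Show help. |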
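As a worked example of the -t formats in the table, the sketch below converts a time limit string into seconds (slurm_time_to_seconds is a hypothetical helper). A bare number is minutes, a value containing '-' starts with days, and the remaining colon-separated fields follow the order listed in the table.

```shell
#!/usr/bin/env bash
# slurm_time_to_seconds (hypothetical helper): convert a Slurm -t/--time value
# into seconds. Prints nothing and returns 1 for an unsupported shape.
slurm_time_to_seconds() {
  local t=$1 days=0 h=0 m=0 s=0
  local -a f
  if [[ $t == *-* ]]; then
    # days-hours, days-hours:minutes, days-hours:minutes:seconds
    days=${t%%-*}
    IFS=: read -r -a f <<< "${t#*-}"
    case ${#f[@]} in
      1) h=${f[0]} ;;
      2) h=${f[0]}; m=${f[1]} ;;
      3) h=${f[0]}; m=${f[1]}; s=${f[2]} ;;
      *) return 1 ;;
    esac
  else
    # minutes, minutes:seconds, hours:minutes:seconds
    IFS=: read -r -a f <<< "$t"
    case ${#f[@]} in
      1) m=${f[0]} ;;
      2) m=${f[0]}; s=${f[1]} ;;
      3) h=${f[0]}; m=${f[1]}; s=${f[2]} ;;
      *) return 1 ;;
    esac
  fi
  # 10# forces base 10 so fields such as 08 are not read as octal
  echo $(( ((10#$days * 24 + 10#$h) * 60 + 10#$m) * 60 + 10#$s ))
}
```

For example, -t 1-2:30 means 1 day, 2 hours and 30 minutes, i.e. 95400 seconds, while -t 90 means 90 minutes.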

Example

#!/bin/bash

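#SBATCH -J TestSerial

#SBATCH -p xahcnormal

#SBATCH -N 1

#SBATCH -n 1

# Slurm does not create the log/ directory; run mkdir -p log before sbatch

#SBATCH -o log/%j.loop

#SBATCH -e log/%j.loop

echo "SLURM_JOB_PARTITION=$SLURM_JOB_PARTITION"

echo "SLURM_JOB_NODELIST=$SLURM_JOB_NODELIST"

srun ./lmp_serial 1000000

Intel MPI supports several ways of integrating with the job scheduler. The recommended one is to launch tasks directly with srun (supported since Intel MPI Library 4.0 Update 3). Before launching, set the I_MPI_PMI_LIBRARY environment variable to Slurm's libpmi.so library (installed by default at /usr/lib64/libpmi.so; the example below uses /opt/gridview/slurm/lib/libpmi.so on this cluster).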
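Because a wrong I_MPI_PMI_LIBRARY path only shows up once srun starts the ranks, a job script can check the file first. A sketch with a hypothetical helper use_slurm_pmi, defaulting to the /usr/lib64/libpmi.so location mentioned above; pass your cluster's path if it differs:

```shell
#!/usr/bin/env bash
# use_slurm_pmi (hypothetical helper): export I_MPI_PMI_LIBRARY only if the
# given Slurm PMI library exists; otherwise report it and return 1.
use_slurm_pmi() {
  local lib=${1:-/usr/lib64/libpmi.so}
  if [[ ! -f $lib ]]; then
    echo "PMI library not found: $lib" >&2
    return 1
  fi
  export I_MPI_PMI_LIBRARY=$lib
}
```

In the WRF script, use_slurm_pmi /opt/gridview/slurm/lib/libpmi.so || exit 1 could replace the plain export line.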

#!/bin/bash

#SBATCH -J wrf

#SBATCH --comment=WRF

#SBATCH -N 2

#SBATCH -n 128

#SBATCH -p xahcnormal

#SBATCH -o %j

#SBATCH -e %j

export I_MPI_PMI_LIBRARY=/opt/gridview/slurm/lib/libpmi.so

module load compiler/intel/2017.5.239

module load mpi/intelmpi/2017.2.174

export FORT_BUFFERED=1

srun --cpu_bind=cores --mpi=pmi2 ./wrf.exe

Job environment variables

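| Name | Meaning | Type | Example |
|---|---|---|---|
| SLURM_JOB_ID | Job ID assigned by the scheduler; can be used with commands such as squeue, scontrol, and scancel | Number | hostfile="ma.$SLURM_JOB_ID" uses $SLURM_JOB_ID to name the machinefile that lists the MPI nodes |
| SLURM_JOB_NAME | Job name, as set with -J | String | mkdir ${SLURM_JOB_NAME} creates a temporary working directory named after the job |
| SLURM_JOB_NUM_NODES | Total number of nodes allocated to the job | Number | echo $SLURM_JOB_NUM_NODES |
| SLURM_JOB_NODELIST | List of nodes allocated to the job | String | echo $SLURM_JOB_NODELIST |
| SLURM_JOB_PARTITION | Partition (queue) the job was assigned to | String | echo $SLURM_JOB_PARTITION |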
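These variables are typically combined into per-job file names, and a job can build an MPI machinefile from the hostnames of its tasks, as the STAR-CCM+ script earlier does with srun hostname | sort | uniq -c. A sketch of both as reusable functions; the names are hypothetical, and SLURM_NTASKS (the task count Slurm sets when -n/--ntasks is given) is not in the table above.

```shell
#!/usr/bin/env bash
# job_log_name (hypothetical helper): build a log name such as
# job_64c_2n_123.log from the job's task count, node count and job ID.
job_log_name() {
  local np=${SLURM_NTASKS:-1} nn=${SLURM_JOB_NUM_NODES:-1} id=${SLURM_JOB_ID:-local}
  echo "job_${np}c_${nn}n_${id}.log"
}

# make_hostfile (hypothetical helper): read one hostname per task on stdin and
# print "host:count" lines, the machinefile format used by the STAR-CCM+ script.
make_hostfile() {
  sort | uniq -c | awk '{ printf "%s:%s\n", $2, $1 }'
}
```

In a job script, srun hostname | make_hostfile > hosts_$SLURM_JOB_ID writes one host:count line per node.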

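Whether a job was started in salloc allocation mode or submitted in sbatch batch mode, you can monitor its resource usage from the command line on the compute node. This cannot be done interactively from a login node.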

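ssh to the compute node and run top -u username to check CPU utilization: the closer each core's utilization is to 100%, the better the CPUs are used. Run free -h to check memory usage: the closer used is to total, the more likely the job is to run out of memory.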
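To record a snapshot from a script instead of watching top interactively, top can run in batch mode and free -m prints memory in MiB. A sketch with hypothetical helper names; mem_used_pct computes the used-memory percentage from free -m output:

```shell
#!/usr/bin/env bash
# cpu_snapshot (hypothetical helper): one batch-mode top listing for a user.
cpu_snapshot() {
  top -b -n 1 -u "${1:-$USER}" | head -n 20
}

# mem_used_pct (hypothetical helper): read `free -m` output on stdin and
# print used memory as an integer percentage of total.
mem_used_pct() {
  awk '/^Mem:/ { printf "%d\n", $3 * 100 / $2 }'
}
```

On a compute node: cpu_snapshot mabing > top_$(hostname).txt and free -m | mem_used_pct.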

[mabing@login06 lammps-test]$ ls

in.silica isi500.data lammps.slurm

[mabing@login06 lammps-test]$ cat lammps.slurm

#!/bin/bash

#SBATCH -J lammps

#SBATCH -p xahcnormal

#SBATCH -N 1

#SBATCH -n 8

module purge

module load lammps/23Jun2022-intel_21

mpirun -np 8 lmp_mpi -i in.silica

[mabing@login06 lammps-test]$ sbatch lammps.slurm

Submitted batch job 27593262

[mabing@login06 lammps-test]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

27593262 xahcnormal lammps mabing R 0:03 1 b02r4n06

[mabing@login06 lammps-test]$ ssh b02r4n06

top - 20:57:18 up 9 days, 21:13, 1 user, load average: 31.90, 27.79, 24.78

Tasks: 838 total, 33 running, 805 sleeping, 0 stopped, 0 zombie

%Cpu(s): 96.4 us, 3.6 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 13176091+total, 23336808 free, 28969952 used, 79454144 buff/cache

KiB Swap: 16775164 total, 16675324 free, 99840 used. 10036424+avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

11214 mabing 20 0 1738096 243972 33016 R 99.7 0.2 4:05.40 lmp_mpi

11216 mabing 20 0 1753688 266328 32848 R 99.7 0.2 4:04.73 lmp_mpi

11217 mabing 20 0 1738156 247992 32892 R 99.7 0.2 4:05.88 lmp_mpi

11223 mabing 20 0 1738552 247612 32600 R 99.7 0.2 4:04.90 lmp_mpi

11220 mabing 20 0 1753468 262140 32904 R 99.3 0.2 4:06.62 lmp_mpi

11221 mabing 20 0 1738484 243612 32788 R 99.3 0.2 4:05.56 lmp_mpi

11222 mabing 20 0 1753660 260092 32796 R 99.3 0.2 4:04.70 lmp_mpi

11213 mabing 20 0 1752084 260724 32996 R 98.0 0.2 4:05.15 lmp_mpi

13730 mabing 20 0 179172 3632 2060 R 0.3 0.0 0:00.84 top

[mabing@b02r4n06 ~]$ free -h

total used free shared buff/cache available

Mem: 125G 27G 22G 304M 75G 95G

Swap: 15G 97M 15G

For GPU or DCU jobs, ssh to the compute node in the same way, load CUDA or DTK, and run watch nvidia-smi or watch hy-smi, as shown in the CUDA/DTK section above.